Information

The public may access information on the contents (artworks, artefacts and cultural sites) in various ways prepared by the curators:

- Information may be added automatically in the curation, before and after the contents, using the Title tool.

- Information can also be curated as a separate track, alongside the visual tracks. Such dedicated, complementary information tracks are curated to stay synchronised with the contents.

- A Descriptions App may be accessed through its automatically generated QR code.

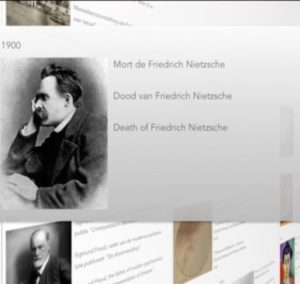

- A dedicated synced historical story line may be added upon request.

Examples: QR code catalogue for Chill; 14-18 Rupture or Continuity Timeline

Parallax / 2.5D Service

Introduction

Parallax, or 2.5D, is a technique that gives a 3D-like effect to a flat image. In the present case, it is applied to paintings by separating their various visual elements into different layers.

Within a 3D environment, camera movement gives an impression of depth because some elements move at a different pace than others, just as, when you move your head, a tree in the foreground appears to move faster while the sky in the background scrolls more slowly.

Because the various elements are placed at different depths, when the camera moves, a 3D animation-like effect is perceived.
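To make this depth cue concrete, here is a minimal sketch assuming an idealised pinhole camera that slides sideways: a layer at depth z then shifts on screen by f·t/z pixels, where f is the focal length in pixels and t the camera travel. The layer names, depths and focal length are illustrative values, not WallMuse production settings.

```python
def screen_shift(camera_travel: float, depth: float, focal_px: float) -> float:
    """On-screen horizontal shift (pixels) of a layer at `depth` when a
    pinhole camera slides sideways by `camera_travel` (same unit as depth)."""
    if depth <= 0:
        raise ValueError("depth must be positive")
    return focal_px * camera_travel / depth


# Illustrative depths (metres) for a painting split into three layers.
layers = {"tree (foreground)": 2.0, "house (midground)": 5.0, "sky (background)": 50.0}

for name, depth in layers.items():
    shift = screen_shift(camera_travel=0.1, depth=depth, focal_px=1000.0)
    print(f"{name:<18} moves {shift:5.1f} px")
```

With these numbers the foreground tree moves 50 px while the sky moves only 2 px; that difference in speed is what the eye reads as depth.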

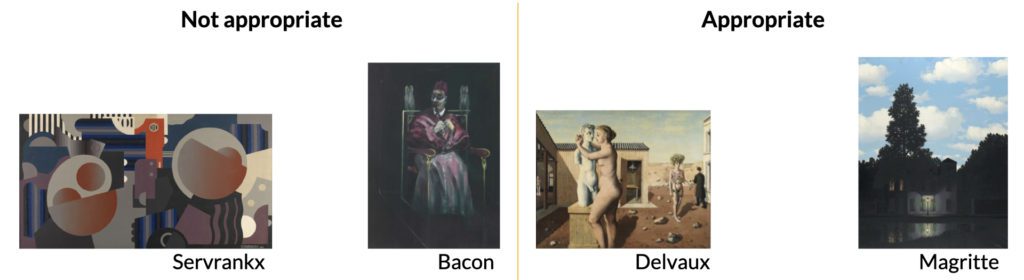

Limitations

Not all paintings or images are appropriate for parallax/2.5D. This may be because the image's elements are too closely interrelated (Bacon), or because separating the visual elements of a painting with no perspective (Servranckx) into layers could be considered an alteration of the painting, which would require approval from the artist or his or her rights holders.

Here are examples of artworks that are and are not appropriate:

Image formats

If producing an HD (1920 x 1080 pixels) augmentation using the 2.5D technique, the original image should be 25% to 50% more detailed than the output. The same applies to a UHD or 4K (3840 x 2160 pixels) augmentation: the original image should again be 25% to 50% more detailed than the output.
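A quick way to turn this margin into pixel targets is sketched below. It reads "25% to 50% more detailed" as 25% to 50% more pixels along each side; that reading is an assumption (it could also be meant as total pixel count), and the function name is hypothetical.

```python
import math


def recommended_source(out_w: int, out_h: int,
                       low: float = 0.25, high: float = 0.50) -> tuple[tuple[int, int], tuple[int, int]]:
    """Return the (minimum, maximum) recommended source sizes in pixels for an
    output of out_w x out_h, adding 25% to 50% along each side."""
    smallest = (math.ceil(out_w * (1 + low)), math.ceil(out_h * (1 + low)))
    largest = (math.ceil(out_w * (1 + high)), math.ceil(out_h * (1 + high)))
    return smallest, largest


for label, (w, h) in {"HD": (1920, 1080), "UHD/4K": (3840, 2160)}.items():
    smallest, largest = recommended_source(w, h)
    print(f"{label}: from {smallest[0]} x {smallest[1]} to {largest[0]} x {largest[1]} px")
```

Under this reading, an HD augmentation calls for a source of 2400 x 1350 to 2880 x 1620 pixels, and a UHD/4K one for 4800 x 2700 to 5760 x 3240 pixels.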

For example, a 4K animation requiring a x2 close-up on its centre would require an original digitization of 7680 x 4320 pixels.

If close-up levels are exaggerated, the animation may end up blurred: with insufficient resolution, each source pixel is spread over several output pixels.

If a particular element of a painting needs closer focus, the original digitization must be large enough to support the desired close-up level on that element.

In other words, when the camera zooms in, the pixels get bigger and blur appears if the resolution of the original image is not high enough. The original image must have a resolution high enough that, at the maximum zoom level of the movie, each pixel of the movie still corresponds to at least one pixel of the original image. For an HD movie of 1920×1080 and a zoom factor of x2, the original image must be at least 3840×2160.
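The rule can be checked in both directions: the minimum source size for a planned zoom, and the maximum sharp zoom a given scan allows. This is a minimal sketch assuming that at zoom x1 the scan is scaled to fill the frame (cropping any excess); the 6000 x 4000 scan is a hypothetical example.

```python
import math


def min_source_size(out_w: int, out_h: int, max_zoom: float) -> tuple[int, int]:
    """Smallest source (pixels) such that, at `max_zoom`, every output pixel
    still maps to at least one source pixel (no upscaling, hence no blur)."""
    return math.ceil(out_w * max_zoom), math.ceil(out_h * max_zoom)


def max_sharp_zoom(src_w: int, src_h: int, out_w: int, out_h: int) -> float:
    """Largest zoom a given source supports before blur appears; the
    limiting side is the one with the smaller source/output ratio."""
    return min(src_w / out_w, src_h / out_h)


print(min_source_size(1920, 1080, 2))          # HD movie, x2 zoom
print(min_source_size(3840, 2160, 2))          # 4K movie, x2 zoom
print(max_sharp_zoom(6000, 4000, 1920, 1080))  # hypothetical 6000 x 4000 scan
```

The first two calls reproduce the figures given in this section (3840 x 2160 and 7680 x 4320); the last shows that such a scan would allow a sharp zoom of x3.125 in an HD movie, limited by its width.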

In our case, production and broadcasts were done in landscape mode, which is a common screen orientation. Briefs for augmenting artworks should be written with this format in mind.

It is also possible to work in portrait mode, provided this orientation is set on the PC player display(s).

The image sources must be in RGB, not CMYK. If a source is in CMYK, which is used for printing, it should be converted to RGB.
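In practice this conversion is done in the image editor, ideally with colour management (ICC profiles). Purely to show what the conversion does, here is a naive per-pixel formula that ignores profiles; it is an approximation and not a substitute for a colour-managed conversion of an artwork reproduction.

```python
def cmyk_to_rgb(c: int, m: int, y: int, k: int) -> tuple[int, int, int]:
    """Naive 8-bit CMYK -> RGB conversion (all channels 0-255).

    Each ink removes its complementary light, and black (k) darkens all three
    channels. No ICC profile is applied, so colours are only approximate.
    """
    def channel(ink: int) -> int:
        return round(255 * (1 - ink / 255) * (1 - k / 255))

    return channel(c), channel(m), channel(y)


print(cmyk_to_rgb(0, 0, 0, 0))      # no ink: white (255, 255, 255)
print(cmyk_to_rgb(255, 0, 0, 0))    # pure cyan: (0, 255, 255)
print(cmyk_to_rgb(0, 0, 0, 255))    # full black: (0, 0, 0)
```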

Creating the layers

The first step in 2.5D production is to separate the artwork into distinct layers in an image manipulation program. It is not possible to separate every element, so the objectives of the augmentation should remain clear. The separated elements should be grouped logically so as to represent their natural depth; for instance, shadows should be associated with their main element. A group may contain one or, occasionally, two elements (e.g. a statue group composed of a statue layer and a shadow layer).
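The grouping rule can be pictured as a small data model: each group sits at one depth, holds one or occasionally two layers (such as a statue and its shadow), and groups are composited from back to front. This is a hypothetical sketch; the class and layer names are illustrative, and in practice the layers live in the image editor's own layer stack.

```python
from dataclasses import dataclass, field


@dataclass
class LayerGroup:
    """One depth group; its layers share a depth, so they move together."""
    name: str
    depth: float                                     # larger = further away
    layers: list[str] = field(default_factory=list)  # listed back to front


def draw_order(groups: list[LayerGroup]) -> list[str]:
    """Back-to-front compositing order (painter's algorithm)."""
    ordered = sorted(groups, key=lambda g: g.depth, reverse=True)
    return [layer for g in ordered for layer in g.layers]


scene = [
    LayerGroup("statue", 3.0, ["statue_shadow", "statue"]),
    LayerGroup("sky", 100.0, ["sky"]),
    LayerGroup("buildings", 20.0, ["buildings"]),
    LayerGroup("soil", 10.0, ["red_soil"]),
]
print(draw_order(scene))
```

Keeping the shadow in the same group as the statue means both receive the same camera-driven shift, so the shadow never drifts away from its element.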

WallMuse Production Services uses Adobe Photoshop, which offers a number of useful tools for this work.

The process consists of separating each foreground element into its own layer. This is detailed work, and selection tools such as “intelligent scissors” can help, provided their precision is adequate.

All layers behind a given layer must be completed, so that when the camera visually moves an element in front, it does not film empty areas. In a simple example of a statue on red soil surrounded by buildings and sky, the camera movement will at times reveal areas that were hidden behind the statue and therefore do not exist in the original. We used the Photoshop “Patch Tool” to fill these areas based on the surrounding existing parts.

This is a trial-and-error approach. Generally, about 5% of the inner area of the following layer needs to be patched. It is a very delicate exercise, and some “patches” must be improved or extended as and when needed.
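Under a simple pinhole-camera assumption, one can estimate how far a background layer must be completed behind a foreground element: when the camera slides sideways by t, layers at depths z_front and z_back shift by f·t/z_front and f·t/z_back pixels, and the difference is the strip of background that becomes visible. This geometric estimate is only an illustration, not WallMuse's rule; the 5% figure above comes from practice, and the values below are hypothetical.

```python
import math


def revealed_width_px(camera_travel: float, focal_px: float,
                      front_depth: float, back_depth: float) -> int:
    """Pixels of the back layer uncovered beside a foreground edge when a
    pinhole camera slides sideways by `camera_travel` (same unit as depths).

    The back layer should be patched at least this far behind the
    foreground outline, or the camera films empty areas.
    """
    if not 0 < front_depth < back_depth:
        raise ValueError("expected 0 < front_depth < back_depth")
    relative_shift = focal_px * camera_travel * (1 / front_depth - 1 / back_depth)
    return math.ceil(abs(relative_shift))


# Hypothetical statue at 2 m in front of buildings at 4 m; 10 cm camera travel.
print(revealed_width_px(0.1, 1000.0, 2.0, 4.0))  # 25
```

Larger camera movements or a bigger depth gap widen the strip to patch, which is one reason patches sometimes need extending once the camera path is known.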

Avoid altering the original painting when creating motion

Animating a painting can bring it to light and make it more dynamic, but this must remain in line with the original artwork, unless the artist himself or herself has chosen otherwise.

WallMuse will integrate such animations directly into its Player as a service for SHAREX members.

The alteration of the image produced by the layers and the camera can be checked against the original at various stages of the animation process. Both the beginning and the end of the animation should match the original image, so that no major differences are noticeable during the camera movement.
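One way to guarantee that the first and last frames match the original is to drive the camera with a curve that returns to the unaltered framing at both ends. In this hypothetical sketch, zoom and pan follow sin(πt), which is zero at t = 0 and t = 1, so the start and end frames can be compared directly with the original image; the maximum zoom and pan values are assumptions.

```python
import math


def camera_at(t: float, max_zoom: float = 1.3, max_pan_px: float = 40.0) -> tuple[float, float]:
    """(zoom, horizontal pan in px) at normalised time t in [0, 1].

    sin(pi * t) is 0 at both ends, so the animation starts and ends on the
    original framing (zoom 1, no pan) and peaks half-way through.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be in [0, 1]")
    s = math.sin(math.pi * t)
    return 1.0 + (max_zoom - 1.0) * s, max_pan_px * s


frames = 250  # e.g. 10 s at 25 fps
path = [camera_at(i / (frames - 1)) for i in range(frames)]
print(path[0], path[-1])  # both (approximately) the original framing
```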

Low-resolution example with Pygmalion (1939), Paul Delvaux

3D interactive videos

Context

The methodology makes it possible to restitute 3D videos of sculptures, installations and cultural sites. Interactivity enables end users to navigate up, down, left or right, with each direction pointing, in WallMuse's language, to a different track, and to play forward and backward.
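The document does not describe how WallMuse's player encodes this navigation, so the following is a purely hypothetical sketch of the idea: synchronised tracks are arranged on a grid, up/down/left/right switches track while keeping the shared playback position, and the play direction can be reversed. Track names, the 3 x 3 layout and the `Navigator` class are all assumptions.

```python
from dataclasses import dataclass

# Hypothetical 3 x 3 grid of synchronised tracks (e.g. camera angles on a sculpture).
GRID = [
    ["top-left", "top", "top-right"],
    ["left", "front", "right"],
    ["low-left", "low", "low-right"],
]
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


@dataclass
class Navigator:
    row: int = 1
    col: int = 1
    position_s: float = 0.0   # playback position shared by all synced tracks
    direction: int = 1        # +1 forward, -1 backward

    @property
    def track(self) -> str:
        return GRID[self.row][self.col]

    def move(self, command: str) -> str:
        """Switch to the neighbouring track, clamping at the grid edges."""
        dr, dc = MOVES[command]
        self.row = min(max(self.row + dr, 0), len(GRID) - 1)
        self.col = min(max(self.col + dc, 0), len(GRID[0]) - 1)
        return self.track

    def reverse(self) -> None:
        self.direction = -self.direction

    def tick(self, dt: float, duration: float) -> float:
        """Advance (or rewind) the shared position, staying within the track."""
        self.position_s = min(max(self.position_s + self.direction * dt, 0.0), duration)
        return self.position_s


nav = Navigator()
print(nav.move("up"), nav.move("left"), nav.tick(2.0, 10.0))
```

Because the playback position is shared rather than stored per track, switching view keeps the same moment of the capture, which is what makes synchronised tracks feel like one navigable 3D scene.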

Whether interactive or not, scenarios are submitted for validation and may include draft versions. 3D modelling may also be used to optimise scenario preparation. RTK (real-time kinematic) navigation is recommended for centimetre-precision flight plans.

Captures

WallMuse is an authorised drone operator in Europe and a member of three networks. We have used various configurations, including 360 cameras, 4K cameras delivering HD output and, more recently, 6K cameras.

Interactivity is prepared for smartphones, Microsoft Kinect and IoT devices.

Example of a 3D Restitution

Multiple synched captures including 360

Diptychs, triptychs and panoramas

Such captures have different uses:

- Optimal scenario preparation with 3D captures: in post-production, optimal views may be found, for instance by aligning movements with soundtracks. They can serve as a draft for higher-quality cameras to replicate.

- Diptych or triptych captures can also be produced, either to be used as drafts or to be enhanced with AI video enhancement programs.

- A 360 track can be provided, either to be interacted with in HTML players or to let users adjust complementary synced views across a cluster of devices and displays.

- Professional higher resolution cameras are used for optimal captures.
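360 tracks are commonly stored as equirectangular frames, in which longitude (yaw) maps linearly to x and latitude (pitch) to y. As a minimal sketch of what an interactive HTML 360 player has to compute, the function below finds the pixel at the centre of the user's view; the yaw convention (0° at the frame centre) and the 3840 x 1920 frame size are assumptions.

```python
def view_to_equirect(yaw_deg: float, pitch_deg: float,
                     width: int, height: int) -> tuple[int, int]:
    """Pixel at the centre of view in an equirectangular 360 frame.

    yaw: degrees, 0 = centre of the frame, increasing to the right (wraps at 360).
    pitch: degrees, +90 = straight up, -90 = straight down.
    """
    if not -90.0 <= pitch_deg <= 90.0:
        raise ValueError("pitch must be within [-90, 90]")
    x = ((yaw_deg + 180.0) % 360.0) / 360.0 * width
    y = (90.0 - pitch_deg) / 180.0 * height
    # Clamp: pitch = -90 maps to y = height, and float rounding could do the same for x.
    return min(int(x), width - 1), min(int(y), height - 1)


print(view_to_equirect(0, 0, 3840, 1920))     # straight ahead: frame centre
print(view_to_equirect(90, 30, 3840, 1920))   # to the right and slightly up
```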

Light Lapses

Light lapses may be useful to capture effects of light during:

- different moments of the day, from dusk to dawn,

- different moments of the year, for instance spring, summer, autumn and winter.

Light lapses are particularly valuable for stained glass, outdoor installations and cultural sites, and such restitutions can also become interactive (example: https://vimeo.com/584226243/e4538085ca). The techniques we use include:

- Time-lapse, using photo captures from tripods, moving robots and drone cameras

- Hyper-lapse, using video captures with the same techniques
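Planning a lapse is mostly arithmetic. A minimal sketch, assuming a 25 fps output and illustrative durations: for a time-lapse, the capture interval sets how many photos are taken and therefore how long the clip lasts; for a hyper-lapse, the ratio between footage length and target length gives how many video frames to skip. Both function names are hypothetical.

```python
def timelapse_plan(capture_hours: float, interval_s: float, fps: float = 25.0) -> tuple[int, float]:
    """Photos taken and resulting clip length (s) for a time-lapse.

    One photo every `interval_s` seconds, including one at the very start.
    """
    photos = int(capture_hours * 3600 // interval_s) + 1
    return photos, photos / fps


def hyperlapse_step(source_s: float, target_s: float) -> int:
    """Keep one video frame in every N to compress `source_s` of footage
    into roughly `target_s` seconds (same frame rate in and out)."""
    return max(1, round(source_s / target_s))


print(timelapse_plan(10, 30))        # 10 hours, one photo every 30 s
print(hyperlapse_step(20 * 60, 40))  # 20 min of footage -> ~40 s clip
```

For instance, ten hours at one photo every 30 seconds yields 1201 photos, i.e. a clip of about 48 seconds at 25 fps; a 20-minute walk-through compressed to 40 seconds keeps one frame in 30.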

Performance Restitutions

Main competencies:

- Multiple full frame setups, using tall tripods

- Use of very high resolution 360 cameras

- One or many mobile cameras, using gimbals, cranes or dollies

- Multiple track audio recordings

- Augmentations where appropriate
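Synchronising several cameras and multitrack recorders is commonly done by aligning their audio through cross-correlation; the document does not say which method WallMuse uses, so this only illustrates that general technique. The brute-force sketch below works on short mono sample lists, and the sample values are a toy example; for real recordings an FFT-based correlation such as `scipy.signal.correlate(..., method="fft")` would be far faster.

```python
def best_lag(reference: list[float], other: list[float], max_lag: int) -> int:
    """Lag (in samples) maximising the cross-correlation, i.e. the shift for
    which other[i + lag] best matches reference[i].

    A positive lag means `other` started recording `lag` samples earlier.
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(r * other[i + lag]
                    for i, r in enumerate(reference)
                    if 0 <= i + lag < len(other))
        if score > best_score:
            best, best_score = lag, score
    return best


# Toy example: the same clap recorded by two devices, 3 samples apart.
main_cam = [0, 1, 3, 1, 0, 0, 0, 0]
second_cam = [0, 0, 0, 0, 1, 3, 1, 0]
print(best_lag(main_cam, second_cam, max_lag=4))  # 3: trim 3 samples from second_cam
```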

3D and Video Integrations Using AI for Immersive Productions

In the entertainment industry, game and film productions often operate independently, even when exploring the same subject. At WallMuse, we aim to bridge these workflows in the realm of art and cultural restitutions. Our goal is to interconnect these processes to optimise multiple complementary output types, enhancing the overall user experience.

By leveraging AI and cutting-edge technologies, we seek to redefine how immersive productions are created and delivered, offering innovative solutions tailored to diverse cultural and artistic contexts.

Generative AI translation services, especially for video recordings

At WallMuse, we aim to integrate AI generative translation tools that respect and value the expertise of professional translators, ensuring all sources and contributions are transparently acknowledged.

How It Works:

Leveraging Expertise

Past translations will be used to refine AI models, incorporating the knowledge and craft of professional translators.

Real-Time Support

Current translators can use the tool in an agile, “on-the-fly” approach, providing their expertise while receiving automated suggestions that streamline and refine the translation process in real time.
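A full AI model is beyond a short example, but the idea of reusing past translations can be illustrated with a far simpler, swapped-in technique: a translation memory that proposes earlier professional translations of similar sentences, each carrying its translator's credit so that sources stay acknowledged. This is a hypothetical sketch using Python's standard `difflib`; the memory entries and credits are invented placeholders, and it is not the Curation Planner's actual implementation.

```python
from difflib import SequenceMatcher

# Placeholder translation memory: (source, past translation, credit).
MEMORY = [
    ("The artist painted this work in 1939.",
     "L'artiste a peint cette œuvre en 1939.", "translator A"),
    ("The statue stands on red soil.",
     "La statue se dresse sur un sol rouge.", "translator B"),
]


def suggest(segment: str, threshold: float = 0.6) -> list[tuple[float, str, str, str]]:
    """Past translations of sources similar to `segment`, best match first,
    as (similarity, source, translation, credit) tuples."""
    hits = []
    for source, target, credit in MEMORY:
        score = SequenceMatcher(None, segment.lower(), source.lower()).ratio()
        if score >= threshold:
            hits.append((round(score, 2), source, target, credit))
    return sorted(hits, reverse=True)


for score, source, target, credit in suggest("The artist painted this work in 1940."):
    print(f"{score:.2f}  {target}  ({credit})")
```

The translator stays in control: the suggestion only surfaces a close past match with its credit, and the human adapts it (here, the date) rather than the tool overwriting the text.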

Expanding Applications

Available as a tool within the Curation Planner, this solution will adapt to various artistic and cultural practices. Translators can actively contribute, while the tool can also generate custom automatic translations for creative contexts such as opera, poetry and other cultural content.

Our Commitment

We believe in collaboration between AI and human expertise, ensuring high-quality translations that preserve the artistic and cultural integrity of the content. By valuing transparency and professionalism, we aim to create a supportive environment where translators and technology work together seamlessly.